What is an ASIC?

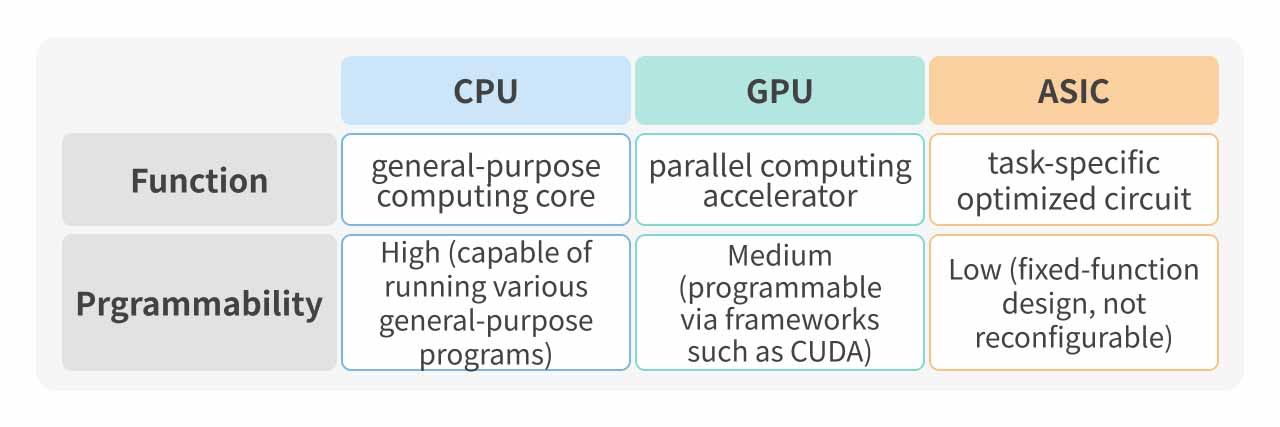

ASIC stands for Application-Specific Integrated Circuit. CPUs (Central Processing Units) are optimized for sequential instruction processing, and GPUs (Graphics Processing Units) pack many cores suited to parallel computing. ASICs, by contrast, are custom chips designed for one specific application before they are manufactured. They offer high performance and energy efficiency, which makes them well suited to specialized tasks such as Bitcoin mining or AI model training and inference. However, they lack the flexibility of GPUs for general-purpose or graphics workloads.

The term "ASIC" dates back to 1967, when it was used to describe customized designs for specific products. ASICs became mainstream in the 1980s and 1990s. However, due to high development barriers, long development cycles, and significant Non-Recurring Engineering (NRE) costs, ASICs were gradually replaced by more flexible general-purpose chips after 2000. For example, NVIDIA’s GPUs are widely used for various computing tasks, reducing the market share of ASICs.

With the global surge in AI development, ASICs have returned to the spotlight. Below, we explore why these chips are critical in the AI era.

Business Models of ASIC

As mentioned, ASICs are custom-designed and manufactured for specific applications. IC design service providers play a key role in ASIC development, and their services generally follow two models: Non-Recurring Engineering (NRE) and Turn-key production:

1. NRE: One-Time Design Development Cost

NRE is the primary upfront cost in ASIC development, which includes:

- Circuit Design: Planning and designing circuits based on client requirements.

- Circuit Verification: Ensuring functionality and performance, preventing logic errors.

- Chip Prototyping: Producing small batches for performance validation.

Due to the custom nature of ASICs, NRE costs are typically high—especially for advanced nodes like 5nm and 3nm.

2. Turn-key: Production & Long-Term Revenue

Once design is completed, the chip enters the manufacturing and testing phase. IC design firms usually offer turn-key services, assisting clients with:

- Foundry production (e.g., TSMC)

- Packaging and testing (e.g., ASE Technology)

In this phase, design service providers take a margin from each produced chip as long-term revenue, ensuring profitability beyond initial NRE recovery.
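To make the two revenue streams concrete, here is a minimal Python sketch of how a design service provider's income from a single project might accumulate: a one-time NRE fee followed by a per-chip turn-key margin. Every figure in it (the fee, the margin, the unit volumes) is a hypothetical placeholder, not data for any real company or project.

```python
def project_revenue(nre_fee, margin_per_chip, units_by_year):
    """Cumulative revenue for one hypothetical ASIC project.

    nre_fee: one-time design fee, booked before mass production
    margin_per_chip: the provider's turn-key margin on each chip shipped
    units_by_year: number of chips shipped in each production year
    """
    cumulative = nre_fee  # NRE is collected up front (year 0)
    timeline = [cumulative]
    for units in units_by_year:
        cumulative += units * margin_per_chip  # recurring turn-key margin
        timeline.append(cumulative)
    return timeline


# Hypothetical project: $30M NRE, $20 margin per chip, volumes ramping over 3 years
timeline = project_revenue(30_000_000, 20, [500_000, 1_500_000, 2_000_000])
for year, total in enumerate(timeline):
    print(f"year {year}: ${total / 1e6:.0f}M cumulative")
```

In this toy case, cumulative turn-key margin overtakes the NRE fee by year 2. That is the sense in which mass production provides long-term revenue beyond the initial NRE recovery.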

ASIC Market Status and Outlook

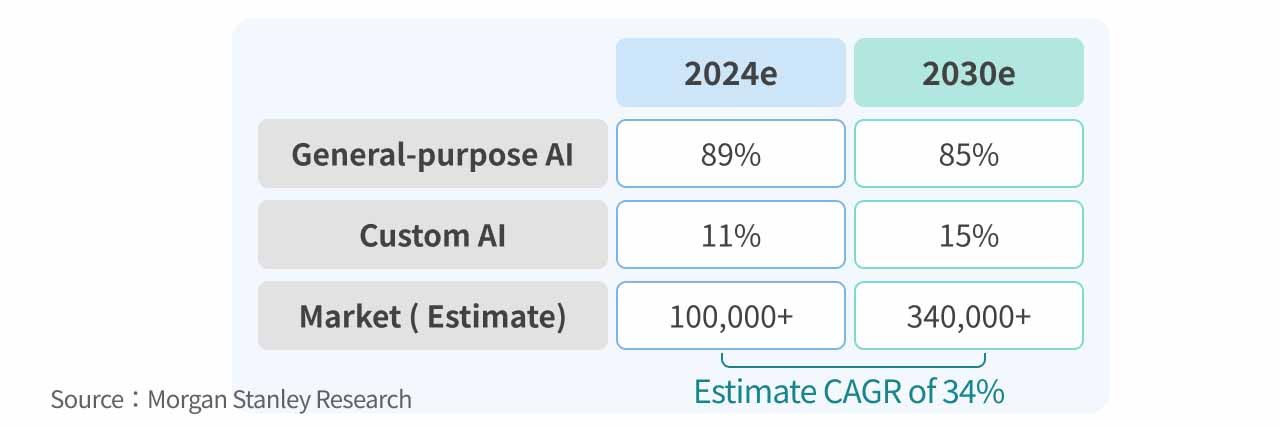

Morgan Stanley forecasts the AI ASIC market to grow from $12 billion in 2024 to $30 billion by 2027, with a compound annual growth rate (CAGR) of 34%.
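The CAGR follows from the start and end values as (end / start)^(1/years) - 1. As a quick check, the Python sketch below applies that generic formula to the rounded endpoints quoted above. It gives roughly 35.7%, slightly above the stated 34%. The published rate likely rests on unrounded market-size estimates. This is an illustration of the formula, not Morgan Stanley's methodology.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# AI ASIC market in USD billions: 2024 -> 2027 spans three compounding years
rate = cagr(12, 30, 3)
print(f"implied CAGR: {rate:.1%}")

# Conversely, compounding $12B at the quoted 34% for three years:
print(f"12 * 1.34**3 = {12 * 1.34 ** 3:.1f}B")
```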

Broadcom and Marvell dominate the market, accounting for over 60% of global ASIC supply.

Broadcom (AVGO)

Broadcom Inc. is a U.S.-based semiconductor and infrastructure software company. Through major acquisitions such as VMware, Broadcom has built two major businesses: semiconductors and infrastructure software. Its products are widely used in data centers, networking, enterprise software, broadband, wireless, and storage. Amid the AI boom, Ethernet networking products such as data center switches and routers, together with custom ASICs, have become its core growth drivers.

In FY25 Q1, Broadcom posted $14.9 billion in total revenue, including $4.1 billion from AI-related businesses. That AI figure was up 77% year over year and above the market expectation of $3.8 billion. The company also plans to expand AI R&D and reported four new potential partners. Broadcom expects the addressable market from its three hyperscale customers to reach $60–90 billion by 2027.
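The reported figures also show how large AI already is within Broadcom's business. The short Python sketch below derives two numbers the text does not state, using only the figures above: AI's share of quarterly revenue and the implied AI revenue for the same quarter a year earlier. Because the inputs are rounded, treat the outputs as approximations.

```python
total_revenue = 14.9  # FY25 Q1 total revenue, USD billions (from the text)
ai_revenue = 4.1      # FY25 Q1 AI-related revenue, USD billions (from the text)
yoy_growth = 0.77     # 77% year-over-year AI growth (from the text)

ai_share = ai_revenue / total_revenue
prior_year_ai = ai_revenue / (1 + yoy_growth)  # back out the year-ago quarter

print(f"AI share of revenue: {ai_share:.1%}")
print(f"implied FY24 Q1 AI revenue: ${prior_year_ai:.2f}B")
```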

Marvell (MRVL)

Marvell Technology Inc. is a U.S.-based fabless IC design company. It has expanded aggressively into ASIC and data center markets and maintains close partnerships with major cloud service providers (CSPs). Its portfolio includes ASICs, electro-optics, Ethernet solutions, Fibre Channel adapters, processors, and storage controllers.

In FY25 Q4, Marvell reported $1.82 billion in revenue, with data center contributing 75.2%. For the full FY2025, revenue reached $5.77 billion. Despite surpassing its AI chip revenue target of $1.5 billion, overall results missed expectations due to weak growth across its five major segments (Data Center, Carrier Infrastructure, Enterprise Networking, Consumer, Automotive), resulting in an EPS of $1.58 (up 4.6%).

Looking to FY2026, custom AI ASICs and silicon photonics will drive demand. However, Marvell faces short-term challenges from a lack of new partnerships or major deals, which may dampen momentum in the second half of the year.

Current ASIC Users (Cloud Providers)

| Cloud Provider | Chip Type | Supporting Company |
|---|---|---|
| Google | TPU (Tensor Processing Unit) | Broadcom |
| Amazon | Inferentia, Trainium | Alchip, Marvell |
| Meta | MTIA (Meta Training and Inference Accelerator) | Broadcom, Andes Technology |
| Microsoft | Maia, Cobalt | Global Unichip Corp. (GUC) |

As shown, leading cloud providers are actively developing ASICs for AI in collaboration with chip designers such as Broadcom and Alchip to accelerate chip development and production. This vertical integration helps reduce reliance on a single AI hardware vendor, such as NVIDIA.

Taiwan’s ASIC Supply Chain

1. Alchip (3661)

Alchip provides IC design services for ASICs in AI, HPC, and communications—HPC being the largest.

- 2025 revenue expected to remain flat or slightly increase, with NRE business growing to 20–30% of revenue.

- Amazon project cycle ending; Intel becomes the largest client in 2025 with its Gaudi 3 AI accelerator (5nm).

- 2026 growth driven by Amazon's 3nm AI chip and 2/3nm project pipelines.

- AI ASIC demand is expected to drive revenue CAGR to 40%–50% from 2025 to 2027, with the market anticipating that Amazon’s Trainium 3 and Inferentia 3 will generate substantial revenue in 2026.

2. GUC (3443)

Global Unichip Corp (GUC) is Taiwan’s first SoC design service company, partially owned (35%) by TSMC.

- 2024 revenue was impacted by inventory adjustments in SSDs and consumer products, along with AI project delays into 2025, limiting short-term growth.

- 2025 recovery driven by mass production of a 3nm cryptocurrency project and other 3–5nm initiatives.

- HBM3E IP adopted by major AI firms; working with Micron and SK Hynix on HBM4.

- Outlook for 2026: Revenue from cryptocurrency is expected to drop to zero, but AI chip projects from cloud service providers (CSPs) are set to grow. New AI projects with Google and Meta are anticipated to drive sustained double-digit revenue growth.

3. Faraday (3035)

A UMC Group subsidiary, Faraday is an IC design service company that was spun off from UMC’s IP and NRE division and later became an independent entity. UMC currently holds a 13.8% stake. Faraday primarily focuses on ASIC design services and IP licensing, and ranks among the world’s top 15 silicon IP (SIP) providers and top 50 ASIC design service companies.

- 2025 revenue forecasted to grow over 40% driven by mass production (MP), NRE, and IP.

- MP revenue growth: Driven by surging demand for advanced packaging projects, with a recovery also expected in mature-node mass production.

- NRE design business: Growth supported by increasing RFQs (Requests for Quotation); the company aims to secure over 10 advanced technology projects annually, most of which are expected to enter mass production in 2026, supporting long-term momentum.

- Q1 2025 revenue: Growth exceeded 150% quarter over quarter and 180% year over year, outperforming market expectations. However, inventory buildup for advanced packaging projects is expected to compress gross margin. Q1 gross margin is projected at just 20–23%, far below the market estimate of 45.6%–46.4%.

- 2025 EPS forecast: Broker estimates put EPS at NT$6.02–7.51. Despite robust revenue growth, margin pressure remains a key challenge.

4. MediaTek (2454)

MediaTek is the fifth-largest IC design company in the world, primarily focused on smartphone chips, smart devices, and power management ICs. Its main competitors in smartphone chipsets include Qualcomm, Unisoc, and HiSilicon (Huawei). In the smart home and AIoT sectors, it competes with companies such as Broadcom, Marvell, Novatek, and Realtek.

- ASIC Business Development: MediaTek has established a dedicated team to serve cloud clients, with its ASIC projects expected to start contributing revenue in Q1–Q2 2026, projected to exceed $1 billion in scale.

- Flagship Smartphone Chips Gaining Market Share: The company’s smartphone business is growing faster than global smartphone shipments, driving revenue growth.

- Wi-Fi 7 Business Expansion: As network standards upgrade, Wi-Fi 7 revenue is expected to double in 2025.

Will ASICs Challenge NVIDIA?

As the GPU leader, NVIDIA (NVDA) faces questions over whether ASICs could challenge its dominance in AI, especially in large language model (LLM) applications.

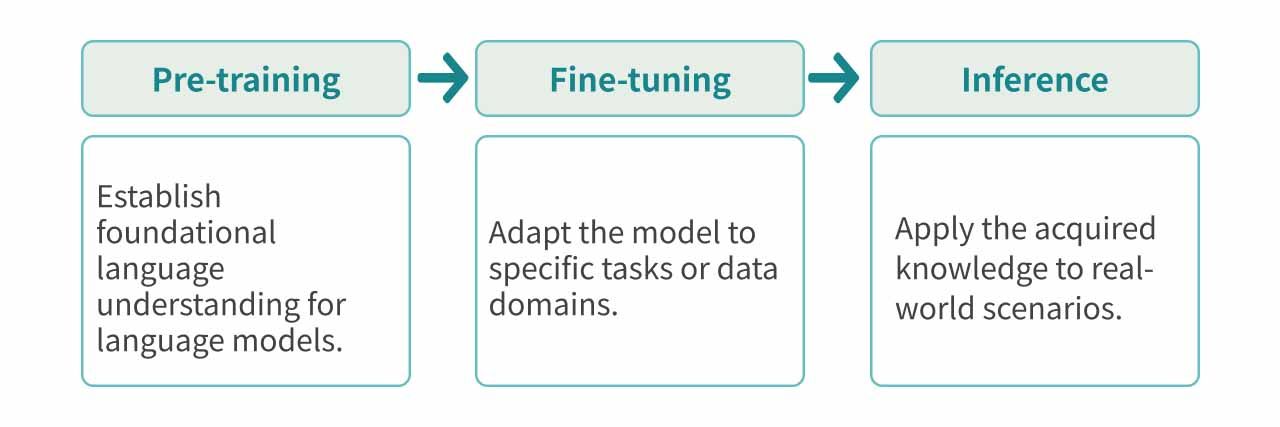

LLM workloads involve two phases: Training and Inference.

Training

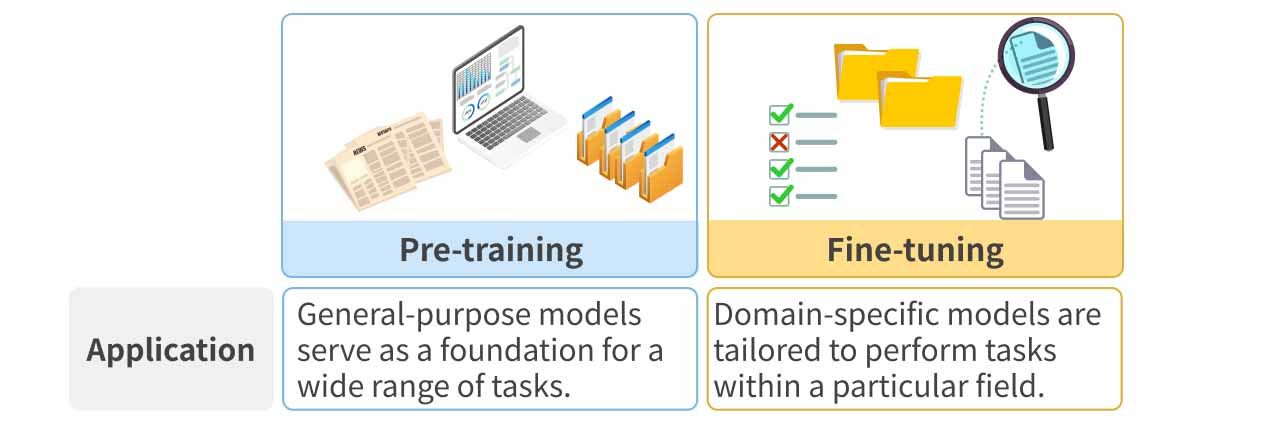

Training includes Pre-training and Fine-tuning stages.

Pre-training requires immense compute power. GPUs excel here thanks to support for high-precision formats (e.g., FP32) and NVIDIA’s rich CUDA ecosystem. ASICs are more specialized: Google’s TPU, for instance, is well suited to matrix-heavy models like BERT.
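The precision point above can be made concrete with Python's standard `struct` module, whose `"f"` and `"e"` format codes round a value to IEEE 754 single precision (FP32) and half precision (FP16). The sketch below is a generic illustration rather than a model of any particular GPU or ASIC. It shows a small, hypothetical weight update surviving when stored in FP32 but being rounded away in FP16. This kind of rounding loss is why numeric format matters during training.

```python
import struct


def round_to_fp32(x):
    """Round a Python float (FP64) to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def round_to_fp16(x):
    """Round a Python float (FP64) to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


weight = 1.0
update = 1e-4  # a small, hypothetical gradient step

print("FP32:", round_to_fp32(weight + update))  # the update survives
print("FP16:", round_to_fp16(weight + update))  # the update is rounded away
print("0.1 in FP16:", round_to_fp16(0.1))       # even 0.1 loses accuracy
```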

Intel’s oneAPI, with tools such as DPC++, offers a path for porting code away from CUDA, but adoption remains low because of CUDA’s entrenched ecosystem and developer familiarity.

Fine-tuning demands flexibility for varied tasks. While ASICs perform well in fixed scenarios, GPUs remain preferable for general fine-tuning applications.

Glossary:

Pre-training: This stage involves using large volumes of diverse, unlabeled data—such as web text, books, and academic papers—to build a foundational understanding of language. The parameters learned during this phase serve as the initial weights for a new neural network.

CUDA: NVIDIA’s parallel computing platform and programming model. It extends languages such as C/C++ so developers can write code that runs on NVIDIA GPUs and build libraries and interfaces on top of it. It supports rapid iterative development and allows performance optimization by tuning low-level code (PTX). Its foundational libraries are well established, and the vast majority of production-grade AI software is built on CUDA.

Fine-tuning: This refers to further training of a pre-trained model on a new, labeled dataset. The model is enhanced and refined during this phase, which can involve adjusting the entire neural network or selectively updating certain layers while freezing others.
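The difference between updating the whole network and freezing some layers can be shown with a toy example. The pure-Python sketch below assumes a hypothetical two-parameter linear "network" whose weights stand in for pre-trained values. Passing only `"w2"` as trainable freezes `w1`, which mirrors the selective updating described above. Real frameworks apply the same principle across millions of parameters by excluding frozen weights from the update step.

```python
def fine_tune(params, data, trainable, lr=0.01, steps=200):
    """Gradient descent on a toy two-layer linear model y = w2 * (w1 * x).

    params: dict with hypothetical pre-trained weights "w1" and "w2"
    data: list of (x, y) labeled pairs for the new task
    trainable: names of parameters to update; all others stay frozen
    """
    params = dict(params)  # copy so the pre-trained weights are left untouched
    n = len(data)
    for _ in range(steps):
        grads = {"w1": 0.0, "w2": 0.0}
        for x, y in data:
            hidden = params["w1"] * x
            err = params["w2"] * hidden - y  # prediction error
            # Gradients of the loss 0.5 * err**2, averaged over the dataset
            grads["w1"] += err * params["w2"] * x / n
            grads["w2"] += err * hidden / n
        for name in trainable:  # frozen parameters are simply skipped
            params[name] -= lr * grads[name]
    return params


def mse(params, data):
    """Mean squared error of the toy model on a dataset."""
    return sum((params["w2"] * params["w1"] * x - y) ** 2 for x, y in data) / len(data)


pretrained = {"w1": 2.0, "w2": 1.0}               # stands in for pre-trained weights
task_data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]  # new labeled task: y = 3x

tuned = fine_tune(pretrained, task_data, trainable=["w2"])
print("w1 (frozen):", tuned["w1"])
print("w2 (tuned):", round(tuned["w2"], 3))
print("loss before:", mse(pretrained, task_data), "after:", round(mse(tuned, task_data), 6))
```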

Inference

In inference, ASICs excel when optimized for specific models and can deliver lower latency and higher throughput than GPUs on the workloads they target. With rising demand for real-time inference and reasoning models, ASIC adoption is set to grow.

Glossary:

Dynamic Inference: Data is processed by the model in real time, producing outputs and predictions immediately upon request based on user input. This requires low latency and fast data access to function effectively.

Reasoning Model: In addition to standard input and output tokens, reasoning models generate special reasoning tokens to break down and interpret the user's question. These models produce answers through an explicit, step-by-step process, and the reasoning tokens are discarded from the final context.
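The bookkeeping behind discarding reasoning tokens can be sketched in a few lines. This is a hypothetical Python example. The `<think>...</think>` delimiter and the message-dictionary format are assumptions chosen for illustration, not the interface of any specific model or API. The sketch keeps the final answer in the conversation history and drops the reasoning, so later turns do not carry those tokens.

```python
import re

# Hypothetical delimiter: this sketch assumes the model wraps its reasoning
# in <think>...</think>; real models and APIs differ in how they mark it.
REASONING = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def add_turn(history, user_msg, model_output):
    """Append one exchange to the chat history, keeping only the final answer."""
    answer = REASONING.sub("", model_output).strip()
    return history + [
        {"role": "user", "content": user_msg},
        {"role": "assistant", "content": answer},
    ]


output = "<think>17 has no divisors between 2 and 4, so it is prime.</think>\nYes, 17 is prime."
history = add_turn([], "Is 17 prime?", output)
print(history[-1]["content"])  # Yes, 17 is prime.
```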

Conclusion

Thanks to high performance and low power consumption, ASICs are gaining traction in AI, cloud computing, 5G, and crypto. As AI demand surges, ASICs are expected to see over 30% CAGR from 2024 to 2027 in cloud data center and accelerator markets.

While NVIDIA GPUs lead training due to CUDA and computing power, ASICs offer a complementary solution for inference, forming a hybrid ecosystem over time.

Broadcom and Marvell dominate globally, while Taiwan’s Alchip, GUC, and others partner with CSPs to develop AI ASICs and expand globally. With mass production projects set for 2026, the ASIC sector holds strong growth potential.