Business Model Overview

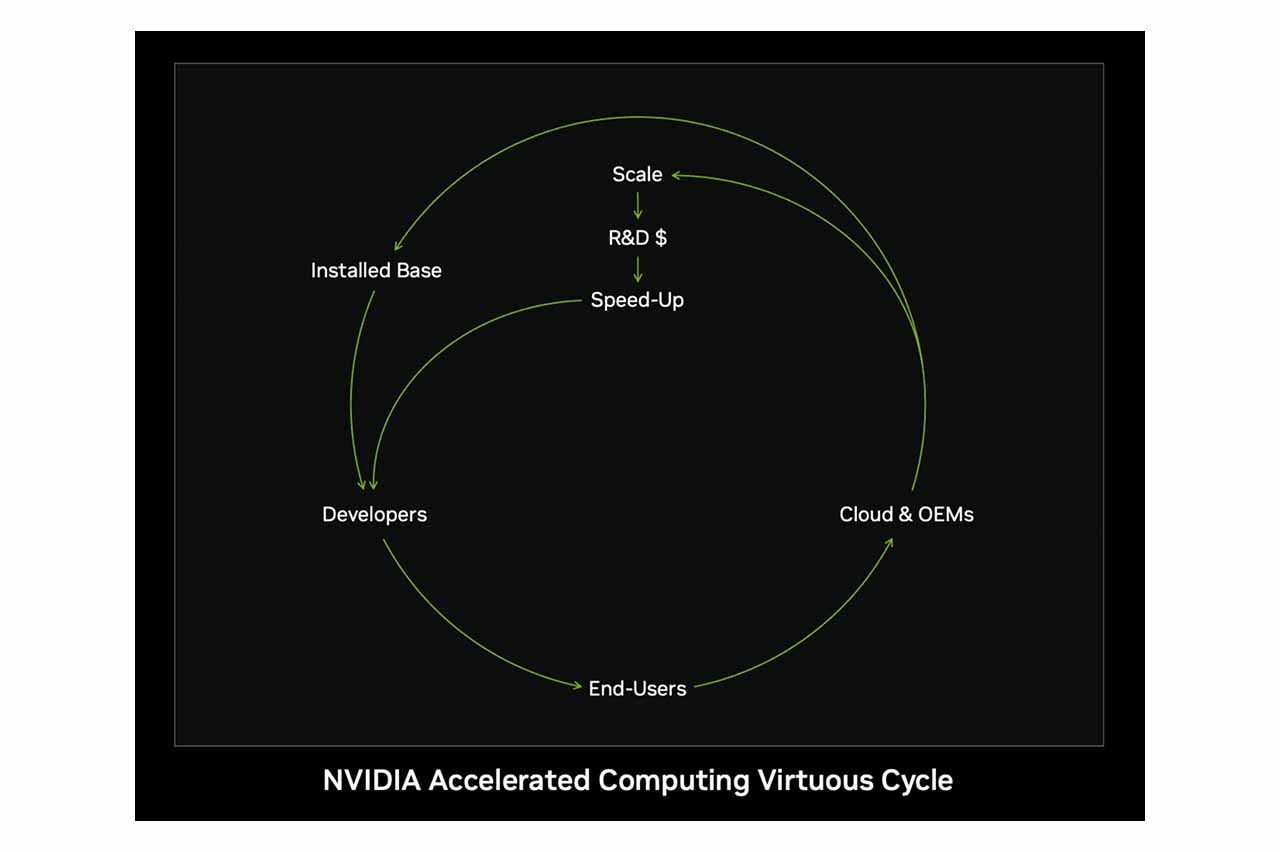

As a global leader in GPU solutions, NVIDIA’s success lies in its unique and robust business model, centered around a comprehensive ecosystem and effective commercial leverage.

Ecosystem

NVIDIA’s ecosystem is one of the foundational pillars of its competitive edge. Through its proprietary CUDA (Compute Unified Device Architecture) platform, NVIDIA has established an expansive and integrated software-hardware ecosystem.

What is CUDA? CUDA (Compute Unified Device Architecture) is NVIDIA’s parallel computing platform and programming model. It lets developers use NVIDIA GPUs for general-purpose computation beyond graphics processing, and it anchors the company’s integrated hardware-software ecosystem.

With CUDA at its core, NVIDIA has introduced numerous cutting-edge technologies and platforms that broaden its ecosystem across multiple development domains, creating a strong product moat. Below are several key platforms and technologies that NVIDIA has recently prioritized:

NVIDIA DRIVE

NVIDIA DRIVE is an end-to-end platform for autonomous driving, integrating embedded supercomputing hardware with software development tools to enable advanced AI capabilities in vehicles. This platform processes data in real-time from sensors like cameras, radar, and LiDAR, enabling safe and reliable autonomous driving.

On the hardware side, NVIDIA offers DRIVE Hyperion, a comprehensive reference architecture incorporating DRIVE AGX systems and a range of sensors. The latest addition, DRIVE Thor, built on the advanced Blackwell architecture, includes a Transformer engine optimized for inference, significantly enhancing the platform’s performance and stability. As the successor to DRIVE AGX Orin, DRIVE Thor showcases clear performance and technological advancements.

The NVIDIA DRIVE platform has been adopted by leading automotive manufacturers and technology firms worldwide, including Mercedes-Benz, Jaguar Land Rover, Volvo Cars, Hyundai, BYD, Polestar, and NIO.

NVIDIA DLSS

NVIDIA DLSS (Deep Learning Super Sampling) helps game developers enhance visual quality and frame rate performance using AI, delivering significantly improved user experiences.

The technology has been widely adopted by top game developers such as Epic Games, CD Projekt Red, and Activision. Additionally, NVIDIA collaborates with partners like ASUS, Gigabyte, and MSI to promote GeForce GPUs and expand its gaming market reach.

NVIDIA Jetson

NVIDIA Jetson is an embedded system designed for autonomous machines, offering high-performance AI computing for developers. It is widely used in robotics, drones, AGVs (Automated Guided Vehicles), smart retail, urban surveillance, industrial automation, and medical AI devices.

Examples include NEXCOM’s edge AI traffic computers, AAEON’s visual recognition systems, and LIPS' 3D camera solution integrated with the Jetson platform.

NVIDIA Maxine

NVIDIA Maxine is an AI software development kit (SDK) offering features such as video denoising, virtual background replacement, and facial expression reconstruction, aiming to enhance video communication quality and interactivity.

Companies such as Tencent Cloud, Looking Glass, and Pexip are already leveraging Maxine to improve cloud video services, 3D holographic video conferencing, and audio denoising, demonstrating its real-world versatility.

NVIDIA Omniverse

NVIDIA Omniverse is a real-time 3D design collaboration and simulation platform. It integrates various software tools and workflows, enabling designers, engineers, and creators to collaborate and build content across platforms.

Real-world applications include BMW Group’s digital twin factory, Pixar’s accelerated animation design, and Amazon Robotics’ simulated 3D environments for optimizing warehouse robots.

NVIDIA CUDA Quantum

NVIDIA CUDA Quantum is an open-source platform designed to integrate quantum and classical computing. It offers a high-performance development environment for designing, simulating, and running quantum algorithms using existing CPU and GPU infrastructure. The platform also includes Quantum Cloud, providing powerful cloud-based quantum computing services. Key partners include Xanadu, Pasqal, and Classiq, who are jointly advancing CUDA Quantum applications.

Source: NVIDIA Keynote at COMPUTEX 2023 (Livestream)

Promoting an Open Ecosystem

NVIDIA actively promotes an open and collaborative ecosystem by partnering with global hardware and software companies, enabling it to rapidly penetrate vertical markets and implement its core technologies into practical solutions.

- Collaborates with tech giants such as AWS, Google, and Microsoft to advance cloud AI services.

- Partners with academic and research institutions to train future technical talent (e.g., Deep Learning Institute - DLI).

This holistic ecosystem strategy lowers customer switching costs and increases loyalty, building a competitive moat and solidifying NVIDIA’s market leadership.

Business Leverage

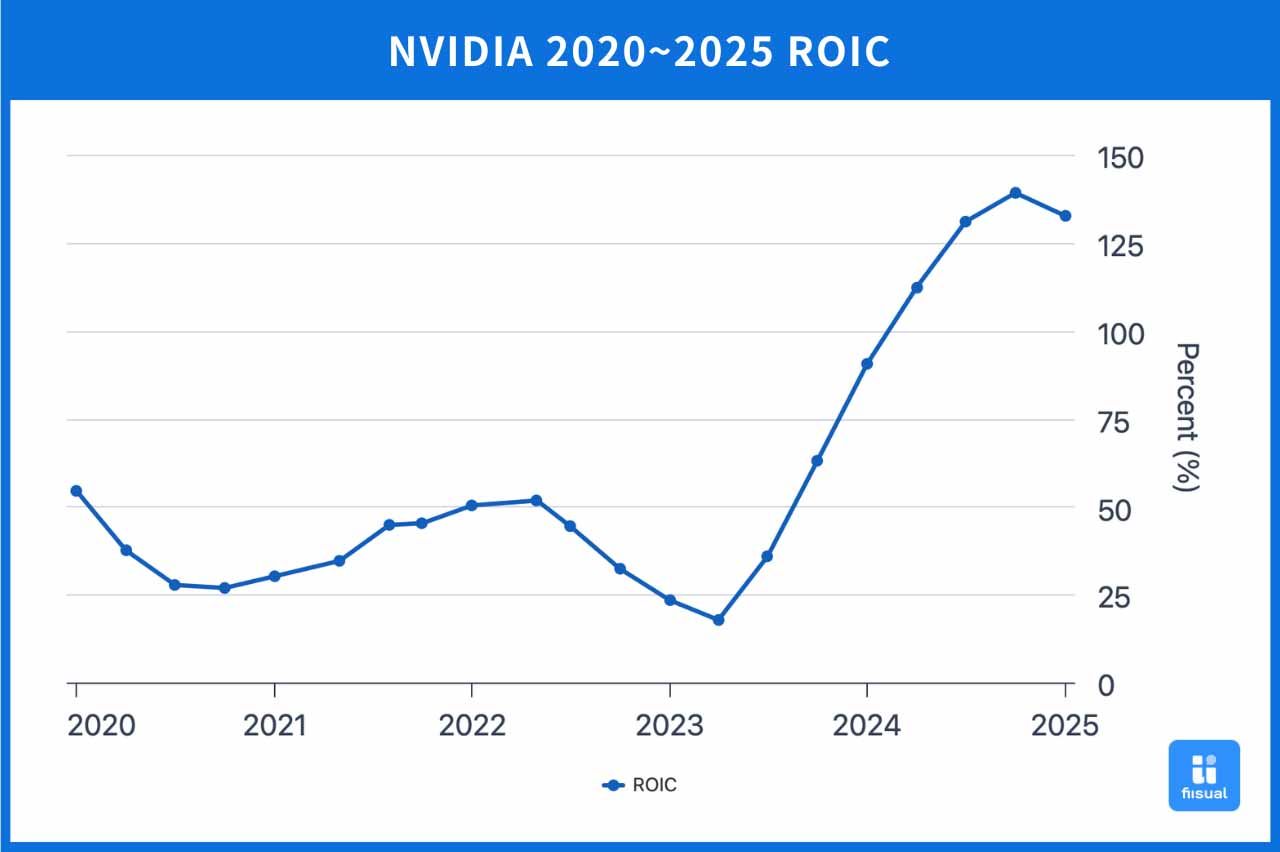

NVIDIA’s business leverage lies in its ability to apply core technologies across multiple markets, achieving economies of scale. For example, its GPU-based architecture not only serves gaming but also extends to AI computing, autonomous driving, VR, and data centers. This cross-market reuse maximizes return on invested capital (ROIC), lowers market entry risks, and distributes R&D costs, reducing unit cost and strengthening competitiveness.
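The cost-sharing argument can be illustrated with a small calculation. The figures below are hypothetical placeholders, not NVIDIA financials, and the function name is made up for this sketch. It only shows how spreading one fixed R&D budget over more units lowers the R&D cost carried by each unit.

```python
# Hypothetical illustration of R&D cost sharing across markets.
# All numbers are made-up placeholders, not NVIDIA data.

def rd_cost_per_unit(rd_budget: float, units_by_market: dict[str, int]) -> float:
    """Fixed R&D budget divided by total units shipped across all markets."""
    total_units = sum(units_by_market.values())
    return rd_budget / total_units


RD_BUDGET = 1_000_000_000  # hypothetical shared-architecture R&D spend, USD

# Same architecture sold into one market versus four markets.
single_market = {"gaming": 10_000_000}
multi_market = {
    "gaming": 10_000_000,
    "data_center": 5_000_000,
    "automotive": 3_000_000,
    "professional": 2_000_000,
}

single_cost = rd_cost_per_unit(RD_BUDGET, single_market)
shared_cost = rd_cost_per_unit(RD_BUDGET, multi_market)

print(f"R&D per unit, one market:   ${single_cost:.2f}")
print(f"R&D per unit, four markets: ${shared_cost:.2f}")
```

With these placeholder inputs, doubling total volume halves the R&D cost per unit. Real cost allocation is more complicated, since each market also needs its own software and validation work.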

Key vertical applications include:

- GeForce for gaming

- Quadro for professional graphics and productivity

- Iray for VR

- DRIVE for autonomous vehicles

- Hopper & Blackwell for AI and data centers

This leverage strategy allows NVIDIA to enter emerging markets quickly and diversify revenue. For example:

- The GPU architecture first developed for GeForce gaming cards became the basis for NVIDIA’s AI accelerators (Tesla/Blackwell) and autonomous driving platforms (DRIVE series).

- Leveraging GPU parallel computing capabilities, NVIDIA became a leading provider of AI solutions during the deep learning boom.

In summary, NVIDIA continually strengthens its competitive edge and long-term value through a complete ecosystem and strategic leverage. As AI and autonomous tech mature, this model will drive further growth and market dominance.

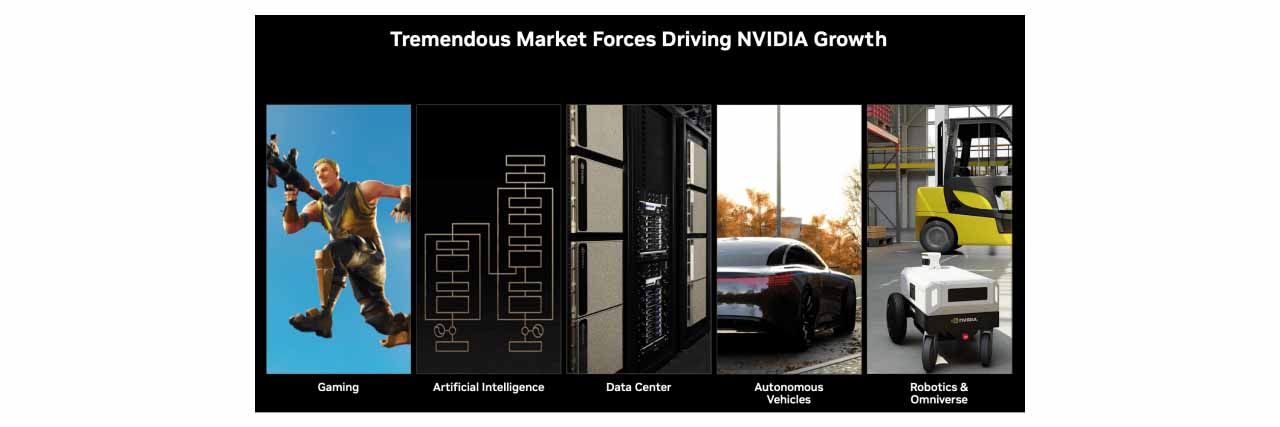

Product Line Introduction and Layout

NVIDIA has adopted a diversified product strategy to meet the needs of various markets. Its main product lines cover gaming, artificial intelligence (AI), data centers, and autonomous driving.

Gaming GPUs: GeForce Series

The GeForce series is NVIDIA’s GPU line tailored for gamers. With superior graphics rendering and computational performance, it enhances gameplay fluidity and visual quality. The latest GPUs such as the GeForce RTX 5090, 5080, and 5070 adopt the cutting-edge Blackwell architecture, featuring advanced Ray Tracing and DLSS 4, significantly improving visual realism and performance for AAA and high-resolution games.

AI GPUs: Hopper & Blackwell

Hopper Series

Hopper is designed for high-performance AI computing, ideal for deep learning training, high-performance computing (HPC), and enterprise AI inference. The flagship H200 GPU includes a Transformer Engine to greatly improve training and inference for models like GPT and BERT. While Hopper remains a mainstream choice for HPC and cloud AI, demand is gradually shifting toward the more powerful Blackwell architecture.

Key Features of Hopper Architecture:

- First-generation Transformer Engine: Accelerates training and inference for large language models (LLMs).

- 4th-gen NVLink: Enhances GPU interconnect bandwidth for large-scale AI workloads.

- HBM3e Memory: Offers higher bandwidth and energy-efficient performance.

Use Cases:

- HPC: Scientific computing, medical imaging.

- Data center AI: Cloud AI services, model training/inference.

- Enterprise AI: Voice recognition, image analysis.

While Hopper still plays a vital role, the release of Blackwell (B200, GB200) is redirecting demand toward more advanced AI and data center workloads.

Blackwell Series

Blackwell is NVIDIA’s latest GPU architecture designed for generative AI, LLMs, and large-scale AI training. The B200 GPU delivers up to 2.5× the performance of the H100, supports HBM3e memory and NVLink 5.0, and lowers power and cost per computation. The GB200 superchip, which combines two B200 GPUs with one Grace CPU, targets major players like Google, Meta, and OpenAI for LLM training.

Core Products of the Blackwell Architecture:

1. B200 GPU

- Built using TSMC’s 4NP process, with 208 billion transistors.

- 2nd-gen Transformer Engine, optimized for LLMs and Mixture of Experts (MoE) models.

- Includes decompression engines and a fast NVLink interconnect to the Grace CPU, providing up to 900 GB/s of bandwidth for shared memory access.

2. GB200 AI Superchip

- Combines two B200 GPUs and one Grace CPU.

- Offers NVL36 and NVL72 configurations for enhanced data throughput and latency reduction.

- Optimized for massive models like GPT-4, Gemini 1.5.

Market Impact and Trends:

- High demand in large-scale AI computing.

- Replacing Hopper as the new AI computing core.

- Initial margin impact: Gross margin dropped from 75% to 73.4% in Q4 2025, expected to recover with scale.

- Strong growth potential as AI adoption rises, driving data center revenue.

Comparison Table: Hopper (H200) vs. Blackwell (GB200)

| | Hopper (H200) | Blackwell (GB200) |
|---|---|---|
| Process | TSMC 4N | TSMC 4NP (custom) |
| Target | Data Center & AI Training | Generative AI & Inference |
| Memory | 141 GB HBM3e, 4.8 TB/s | 13.5 TB HBM3e, 576 TB/s (NVL72 rack total) |
| Transformer Engine | 1st Gen | 2nd Gen, optimized for LLMs |
| NVLink | Gen 4 (900 GB/s) | Gen 5 (1.8 TB/s) |
| Performance Boost | ~1.9× over H100 | Up to 30× over H100 (inference) |
| Power Consumption | Max TDP 700W | Max TDP 1200W (NVL72) |
| Superchip | N/A | GB200 (1 Grace CPU + 2 B200 GPUs) |
| Use Case | HPC, data center, enterprise AI | Gen AI, LLMs, hyperscale AI |
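The memory row is hard to compare directly. The H200 figures are per GPU, while the GB200 figures (13.5 TB, 576 TB/s) appear to be totals for a 72-GPU NVL72 rack. Taking that reading as an assumption, a rough per-GPU normalization might look like the sketch below; the variable names are illustrative.

```python
# Normalize the GB200 memory figures to per-GPU values for comparison.
# Assumption: 13.5 TB and 576 TB/s are totals for a 72-GPU NVL72 rack.

GPUS_PER_RACK = 72

h200 = {"memory_gb": 141, "bandwidth_tbs": 4.8}          # per GPU, from the table
gb200_rack = {"memory_tb": 13.5, "bandwidth_tbs": 576}   # assumed rack totals

# Convert TB to GB (decimal units) and divide by the GPU count.
per_gpu_memory_gb = gb200_rack["memory_tb"] * 1000 / GPUS_PER_RACK
per_gpu_bandwidth_tbs = gb200_rack["bandwidth_tbs"] / GPUS_PER_RACK

memory_ratio = per_gpu_memory_gb / h200["memory_gb"]
bandwidth_ratio = per_gpu_bandwidth_tbs / h200["bandwidth_tbs"]

print(f"Blackwell per GPU: {per_gpu_memory_gb:.1f} GB, {per_gpu_bandwidth_tbs:.1f} TB/s")
print(f"vs H200: {memory_ratio:.2f}x memory, {bandwidth_ratio:.2f}x bandwidth")
```

Under that assumption, each Blackwell GPU has about 187.5 GB of memory and 8 TB/s of bandwidth. That is a much smaller per-GPU gap over the H200 than the raw table entries suggest.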

Product Competitive Advantages

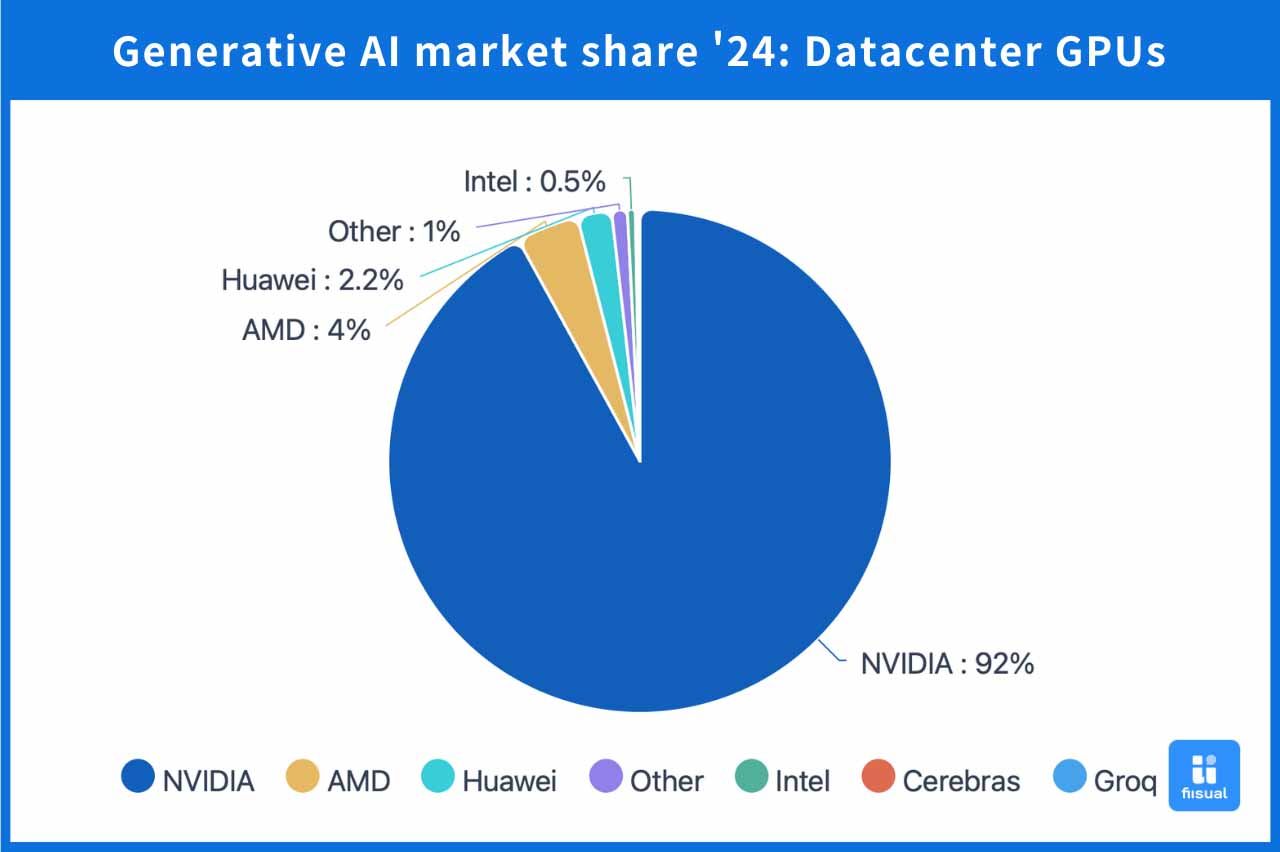

Dominant Market Share Driven by Ecosystem

NVIDIA holds a staggering 92% market share in the data center GPU segment. With the rapid advancement of AI, demand for computing chips is soaring. At the GTC conference, CEO Jensen Huang noted that the four major U.S. cloud service providers (CSPs) purchased 1.3 million Hopper GPUs in 2024 and 3.6 million Blackwell GPUs in 2025, highlighting an enormous accumulation of computing capacity.

According to IoT Analytics, as of March 2025, NVIDIA dominates the data center GPU market with a 92% share, while AMD trails at 4%. NVIDIA’s dominance is largely attributed to the strength of its CUDA platform.

First launched in 2006, CUDA (Compute Unified Device Architecture) transformed GPUs from graphics processors into general-purpose computing powerhouses. Developers can program in C, C++, and more, using CUDA to offload massive computational workloads to GPUs while CPUs handle other tasks. This flexibility became a major driver behind the surge in AI workloads. Since CUDA is exclusive to NVIDIA GPUs, its ease of use and long-term development have built a loyal user base and formed a deep competitive moat.

Leading in Technology and Compute Power

AMD and Intel are NVIDIA’s key challengers in the semiconductor space. Here's a comparison of their latest chips:

| | GB200 | MI300X | Gaudi 3 |
|---|---|---|---|
| Company | NVIDIA | AMD | Intel |
| Architecture | Grace CPU + 2 Blackwell GPUs | CDNA 3 GPU accelerator (the CPU + GPU variant is the MI300A) | Dedicated AI accelerator, not a CPU + GPU design |
| Compute Power | High | Medium | Low |
| Estimated Price (USD) | $60,000–$70,000 | $14,813 | $15,650 |
| Value for Money (performance per dollar) | Low | High | Medium |
| Unique Strengths | CUDA integration, AI/HPC optimized | High value, competes with Hopper | Cost-effective alternative |
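To make the price gap concrete, the sketch below computes how many times more a GB200 costs than each rival, using only the estimated prices in the table. That multiple is also the performance advantage a GB200 would need just to match a rival on performance per dollar. No performance numbers are assumed, since the table gives only qualitative ratings, and the function name is illustrative.

```python
# Estimated prices (USD) taken from the comparison table.
PRICES_USD = {
    "GB200": (60_000, 70_000),    # estimated range
    "MI300X": (14_813, 14_813),   # single estimate
    "Gaudi 3": (15_650, 15_650),  # single estimate
}


def price_multiple(product: str, baseline: str) -> tuple[float, float]:
    """Return the (low, high) price of `product` as a multiple of `baseline`."""
    p_low, p_high = PRICES_USD[product]
    b_low, b_high = PRICES_USD[baseline]
    # Lowest multiple: cheapest product price over priciest baseline price.
    return p_low / b_high, p_high / b_low


for rival in ("MI300X", "Gaudi 3"):
    low, high = price_multiple("GB200", rival)
    print(f"GB200 costs {low:.1f}x to {high:.1f}x as much as {rival}")
```

On these estimates, a GB200 costs roughly 4.1 to 4.7 times as much as an MI300X. A GB200 bundles two GPUs and a CPU, so this is a per-product comparison, not a per-accelerator one.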

While NVIDIA is more expensive, it remains ahead in performance and innovation. However, AMD’s MI300 series is gaining attention due to its exceptional value. At Computex 2024, AMD CEO Lisa Su announced that the MI325X, to be released in 2025, will have 1.8× the memory and 1.3× the bandwidth of NVIDIA’s H200. AMD also plans to release the MI350 and MI400, which may surpass NVIDIA’s Hopper series in performance at a lower cost. Additionally, AMD’s open-source ROCm platform offers more flexibility than the proprietary CUDA platform.

Another rising threat is ASICs (Application-Specific Integrated Circuits). These custom-designed chips are developed with IC leaders like Broadcom to meet specific client needs. ASICs are highly optimized, offering superior energy efficiency and cost control. While general-purpose GPUs remain essential for flexibility, ASICs provide an attractive complementary solution in the maturing compute market.

In the long term, as data centers focus more on cost control, price-to-performance will become a decisive factor. NVIDIA's future strategy must consider both ecosystem retention and pricing flexibility to stay ahead.

Product Updates: GTC Conference Highlights

Launch of Blackwell Ultra and Feynman Architecture

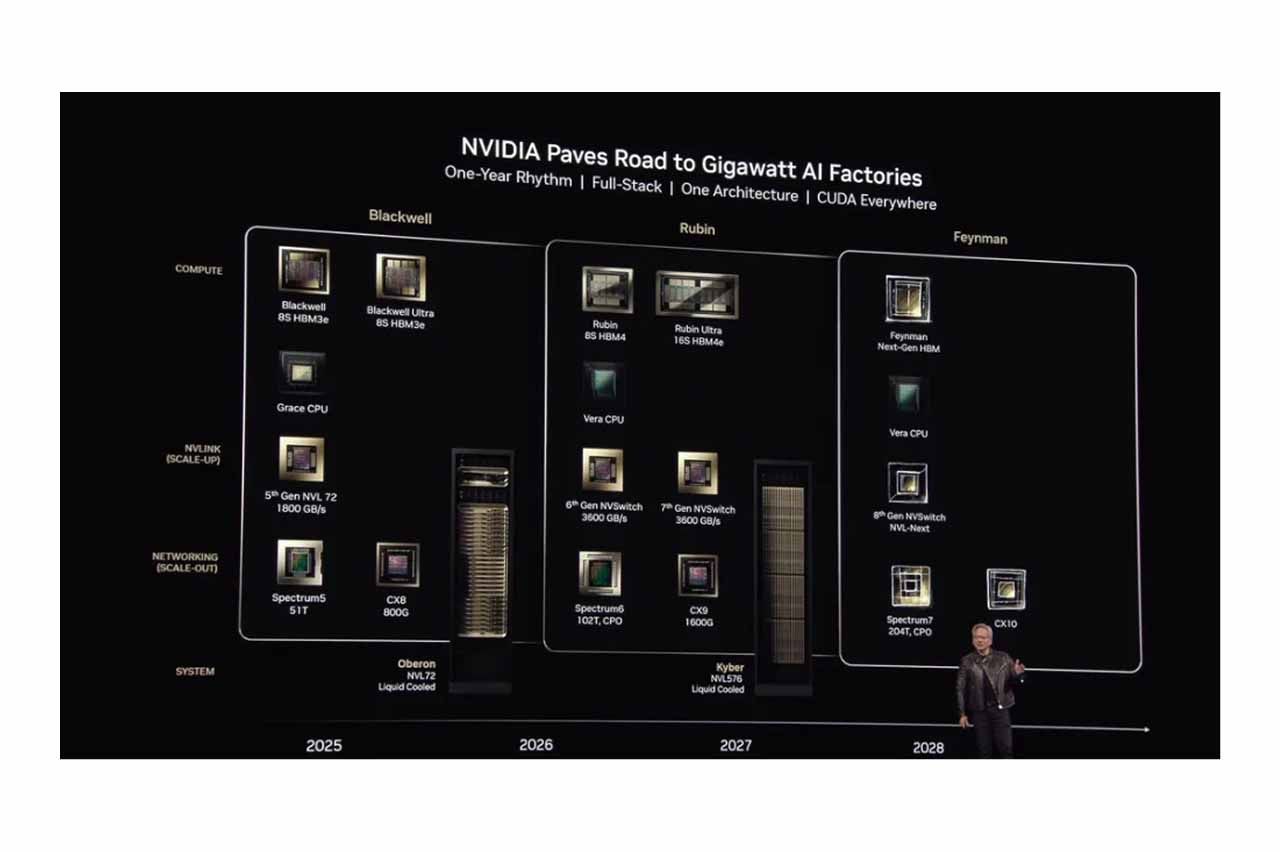

On March 19, Jensen Huang unveiled Blackwell Ultra, designed for inference and agentic AI. It offers 1.5× the performance of current Blackwell chips and is expected to launch later this year.

He also revealed the next-generation Rubin architecture arriving in 2026, which includes the Vera CPU, expected to deliver 2× the performance of Grace. And looking even further ahead, NVIDIA plans to launch an entirely new GPU architecture—Feynman—in 2028.

Additionally, NVIDIA introduced Dynamo, an open-source inference framework that scales efficiently and reduces cost. The rapid product cycle and technological innovation are major advantages that help clients maximize hardware-software synergy.

GB300 Development Update

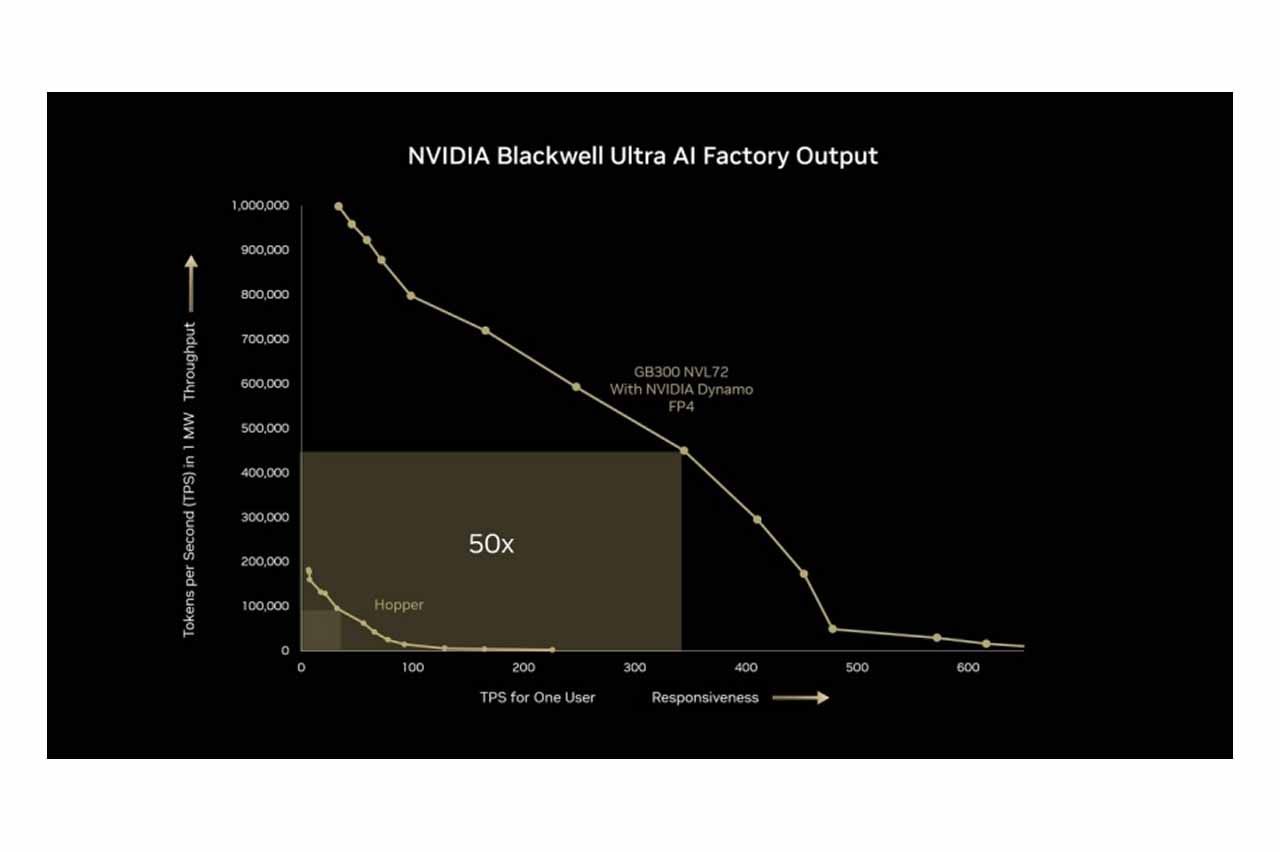

NVIDIA is set to launch the Blackwell Ultra NVL72 (GB300) rack platform in the second half of 2025. The GB300 will adopt SXM7 and Cordelia modules, replacing the Bianca motherboard. Each Cordelia module integrates 4 Blackwell B300 GPUs and 2 Grace CPUs, totaling 72 GPUs and 36 CPUs, while simplifying rack design and saving space.
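The rack totals follow directly from the per-module counts. As a quick consistency check, the sketch below derives the implied number of Cordelia modules per rack. That module count is inferred from the figures above and is not a number stated in the source.

```python
# Consistency check for the GB300 NVL72 rack composition described above.
GPUS_PER_MODULE = 4   # B300 GPUs per Cordelia module
CPUS_PER_MODULE = 2   # Grace CPUs per Cordelia module
TOTAL_GPUS = 72       # GPUs per NVL72 rack

# Number of modules implied by the GPU count (inferred, not stated in the text).
modules, leftover = divmod(TOTAL_GPUS, GPUS_PER_MODULE)
if leftover:
    raise ValueError("GPU total is not a whole number of modules")

total_cpus = modules * CPUS_PER_MODULE

print(f"Modules per rack: {modules}")
print(f"Grace CPUs per rack: {total_cpus}")
```

The result is 18 modules and 36 Grace CPUs, which matches the stated totals of 72 GPUs and 36 CPUs.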

This upgrade offers OEM/ODM partners greater integration flexibility and enables 10× faster inference response, 5× higher throughput, and 50× overall performance gains.

Conclusion

NVIDIA continues to play a central role in both gaming and data center markets. In the near term, few competitors can rival its position. However, with rapid technological evolution and a maturing market, future competition will increasingly revolve around cost-efficiency.

Key challenges for NVIDIA will be:

- Retaining users through its ecosystem and preventing migration to open platforms.

- Adjusting pricing strategies to stay competitive in a value-driven environment.

Despite uncertainties in downstream AI applications, NVIDIA is aggressively expanding into autonomous vehicles, robotics, and other emerging areas. By continuously redefining the AI landscape, it aims to sustain long-term demand.

Investors should continue to monitor real-world demand closely to guard against the risk of an AI bubble. NVIDIA’s sustained growth ultimately hinges on whether these AI applications can deliver consistent value at scale.